Defeating Deepfakes at the Source

Deepfake photos and videos increasingly warp our sense of reality. No, the president of Ukraine did not surrender to Russia, as suggested by a manipulated video of Volodymyr Zelenskyy. No, Nancy Pelosi of the U.S. House of Representatives did not slur her words, despite widespread circulation of a deepfake video that distorted her speech. There are countless other examples of such deepfakes — some are merely for entertainment, while others are more sinister and raise serious concerns.

“By analyzing existing videos and producing new frames that mimic genuine ones, increasingly sophisticated deepfake methods can fabricate very realistic – yet totally bogus – videos or photos,” explains Ardalan Amiri Sani, a computer science professor in the UC Irvine Donald Bren School of Information and Computer Science (ICS). “Deepfake technology has been abused to spread disinformation, and perpetrators are producing increasingly convincing videos with improving tools.”

To counter this threat, he and collaborators from UCI and Microsoft Research are taking an unconventional approach.

A New Approach

Today, deepfakes are detected by recognizing known flaws in manipulated or fabricated photos and videos. But deepfake technology is advancing rapidly, so detection techniques are always playing catch-up. “As deepfake techniques improve, it becomes more difficult to detect deepfakes by just analyzing the content,” says Prof. Amiri Sani. The result is a familiar, never-ending “arms race.”

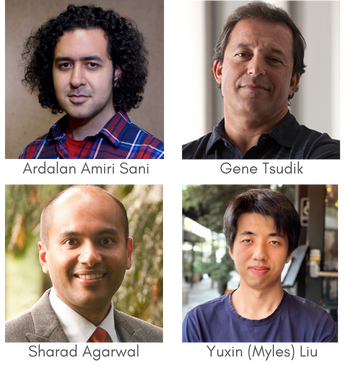

In contrast, Prof. Amiri Sani and his collaborators focus on a prevention-based approach that provides verifiable cryptographic provenance for photos and videos captured by cameras, especially smartphone cameras. The team consists of UCI Ph.D. students Yuxin (Myles) Liu, Yoshimichi Nakatsuka, and Mingyi Chen; ICS faculty Amiri Sani and Gene Tsudik; Microsoft researcher Sharad Agarwal; and NJIT faculty member Zhihao (Zephyr) Yao (previously a Ph.D. student at UCI).

“The truth is that these things are easily manipulated by fancy AI algorithms and programs,” says Prof. Tsudik. “With security, there is often a kind of an arms race between those who create fakes, and those who detect them. Instead, what we set out to do is to securely attest to things that are not fake.”

This approach also addresses emerging dangers posed by generative AI. “Both photos and videos produced by generative AI can be passed for authentic content, and that is becoming a more urgent threat because the barrier for someone to use generative AI is much lower than the barrier to use deepfake technology,” stresses Dr. Agarwal, noting that the team’s underlying technology is equally applicable in both scenarios.

He also observes that visual content is now a critical part of our automated lives, beyond just news and social networks. “Visual media are used to automate border passport control, driving, assisting visually impaired humans, home security, industrial safety, and remote meetings — which can cover high stakes agendas such as national defense,” he says. “Proving the authenticity of this content will allow us to rely more on automation and unlock productivity gains for society.”

Verifying Videos and Proving Authenticity

The team built a system called Vronicle, which generates and uses verifiable provenance information for videos, especially those captured by mobile devices. Vronicle runs video capture and post-processing inside Trusted Execution Environments (TEEs), hardware-isolated execution environments that are now widely available on mobile devices. This lets it construct fine-grained provenance information so that consumers can verify various aspects of a video, such as how it was captured and which post-processing steps were applied to it.
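To make "fine-grained provenance" more concrete, here is a minimal sketch of one way such information could be organized. This is not Vronicle's actual design or code. Each step (the capture, then each edit) logs a digest of its input and output, is linked to the previous record, and carries an authentication tag. A consumer can then check that the chain starts at a capture, that no step was altered or skipped, and that it ends at the video actually received. The names `ProvenanceRecord`, `append_step`, `verify_chain`, and `DEVICE_KEY` are hypothetical. HMAC-SHA256 from Python's standard library is a swapped-in stand-in for the asymmetric signatures a real TEE would produce with an attested key.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical key that never leaves the TEE. A real system would sign with an
# attested asymmetric key; HMAC-SHA256 is a standard-library stand-in here.
DEVICE_KEY = b"hypothetical-tee-key"


def digest(data: bytes) -> str:
    """SHA-256 digest of video bytes, as a hex string."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class ProvenanceRecord:
    step: str          # hypothetical step name, e.g. "capture" or "crop"
    input_hash: str    # digest of the video before this step ("" for capture)
    output_hash: str   # digest of the video after this step
    prev_tag: str      # tag of the previous record, linking the chain
    tag: str           # authentication tag over the four fields above


def _tag(step: str, input_hash: str, output_hash: str, prev_tag: str) -> str:
    msg = "|".join([step, input_hash, output_hash, prev_tag]).encode()
    return hmac.new(DEVICE_KEY, msg, hashlib.sha256).hexdigest()


def append_step(chain: List[ProvenanceRecord], step: str,
                before: Optional[bytes], after: bytes) -> List[ProvenanceRecord]:
    """Log one capture or editing step (imagined as running inside the TEE)."""
    prev_tag = chain[-1].tag if chain else ""
    in_hash = digest(before) if before is not None else ""
    out_hash = digest(after)
    record = ProvenanceRecord(step, in_hash, out_hash, prev_tag,
                              _tag(step, in_hash, out_hash, prev_tag))
    return chain + [record]


def verify_chain(chain: List[ProvenanceRecord], final_video: bytes) -> bool:
    """Consumer-side check: the chain starts with a capture, every tag is
    valid, each step consumed the previous step's output, and the last
    output matches the video actually received."""
    if not chain or chain[0].step != "capture":
        return False
    prev_tag, prev_out = "", ""
    for rec in chain:
        expected = _tag(rec.step, rec.input_hash, rec.output_hash, rec.prev_tag)
        if not hmac.compare_digest(expected, rec.tag):
            return False
        if rec.prev_tag != prev_tag or rec.input_hash != prev_out:
            return False
        prev_tag, prev_out = rec.tag, rec.output_hash
    return prev_out == digest(final_video)


raw = b"raw frames from the camera sensor"
cropped = raw[4:]  # stand-in for a post-processing edit
chain = append_step([], "capture", None, raw)
chain = append_step(chain, "crop", raw, cropped)
print(verify_chain(chain, cropped))      # True
print(verify_chain(chain, b"deepfake"))  # False
```

Because each record commits to both its input and output, a viewer learns not only that the footage came from a camera but also which edits were applied along the way, which is the kind of per-step detail the term "fine-grained" suggests.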

This work is outlined in “Vronicle: Verifiable Provenance for Videos from Mobile Devices,” a paper published at ACM MobiSys 2022, a top-tier conference on mobile computing.

“Our approach is to forget the arms race,” says Prof. Tsudik, “and instead to have a secure way of making the content verifiable.” Viewers will then know that such content comes from a trusted camera source, confirming its authenticity.

Another development from the same team is a secure camera module, called ProvCam, that generates a cryptographic “proof of authenticity” for recorded videos within the camera itself. Compared to Vronicle, this significantly shrinks the Trusted Computing Base (TCB), the set of hardware and software components that must be trusted for the system to be secure, and thus improves overall security. This work is described in a recently accepted paper that will be presented at ACM MobiCom 2024, another top-tier conference in mobile computing.
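The core idea of generating the proof "within the camera itself" can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not ProvCam's design or interface. The hypothetical `HypotheticalSecureCamera` class holds a device key and authenticates the frames before handing them to the rest of the system. Software outside the module (the operating system, apps) sees only frames and a proof, so it does not need to be trusted to produce honest proofs. `DEVICE_KEY`, `_proof`, and `verify` are illustrative names. As above, HMAC-SHA256 stands in for a real hardware-backed signature. That means the verifier here must share the secret key, whereas a deployed system would let anyone verify with a public key.

```python
import hashlib
import hmac
from typing import List, Tuple

# Hypothetical device key. Real hardware would keep a private signing key in
# the camera module and publish the matching public key; HMAC is a
# standard-library stand-in, so here the verifier must share the secret.
DEVICE_KEY = b"hypothetical-device-key"


def _proof(key: bytes, frames: List[bytes]) -> str:
    """Authenticate an ordered sequence of frames."""
    hasher = hashlib.sha256()
    for frame in frames:
        # Length-prefix each frame so frame boundaries are unambiguous.
        hasher.update(len(frame).to_bytes(8, "big"))
        hasher.update(frame)
    return hmac.new(key, hasher.digest(), hashlib.sha256).hexdigest()


class HypotheticalSecureCamera:
    """Toy model of a camera module that produces its proof internally.

    In this toy, the key is only meant to be used inside the class; real
    hardware would enforce that isolation so the OS and apps never see it.
    """

    def __init__(self, key: bytes) -> None:
        self._key = key  # stands in for a key sealed in camera hardware

    def record(self, sensor_frames: List[bytes]) -> Tuple[List[bytes], str]:
        frames = list(sensor_frames)
        return frames, _proof(self._key, frames)


def verify(key: bytes, frames: List[bytes], proof: str) -> bool:
    """Recompute the proof over the received frames and compare."""
    return hmac.compare_digest(_proof(key, frames), proof)


camera = HypotheticalSecureCamera(DEVICE_KEY)
frames, proof = camera.record([b"frame-0", b"frame-1", b"frame-2"])
print(verify(DEVICE_KEY, frames, proof))                                 # True
print(verify(DEVICE_KEY, [frames[0], b"swapped-in", frames[2]], proof))  # False
```

The design choice this sketch highlights is where the proof is created. If it is computed before frames ever leave the camera module, a compromised operating system can still alter the footage, but it cannot produce a matching proof for the altered version. That is one way of reading why moving proof generation into the camera reduces the TCB.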

“Once provenance solutions become available, attackers will try to compromise the provenance generation framework in order to generate verifiable provenance for their deepfake content,” says Prof. Amiri Sani. “This is why we are trying to make the framework super secure, raising the bar for such attacks.”

The team is currently pursuing several research directions to improve the security and performance of its provenance-based approach.

“With these two results (Vronicle and ProvCam), we aim to restore people’s trust towards photos and videos as reliable information sources in the digital age,” says Liu, “where ‘seeing is believing’ should ideally still hold true.”

— Shani Murray