| Introduction | In this lecture, we will discuss a variety of general issues in program simplification. It introduces no new Python programming features, but instead steps back to provide a perspective on one very important programming skill that all competent programmers must acquire. Sometimes simplifying a program is the only way to comprehend it, and hence debug it. Finally, this lecture shows that programs themselves can be studied and manipulated in a formal way (just as expressions are manipulated in algebra). |

| Simplification |

We can use the laws of algebra to tell whether two forms are equivalent: using either one produces the same result.

Thus, equivalence is a mathematical topic.

But as programmers, we must judge which of two equivalent forms is simpler, and use that one in our programs.

The simplest program is the one that is easiest to read, debug, and maintain. Thus, simplicity is a psychological topic. As a rule of thumb, smaller forms are often easier to understand (although sometimes a bit of redundancy makes forms easier to understand: smtms lss s nt mr). In this section we will examine three kinds of algebras for proving equivalences: Boolean Algebra, Relational Algebra, and Control Structure Algebra.

|

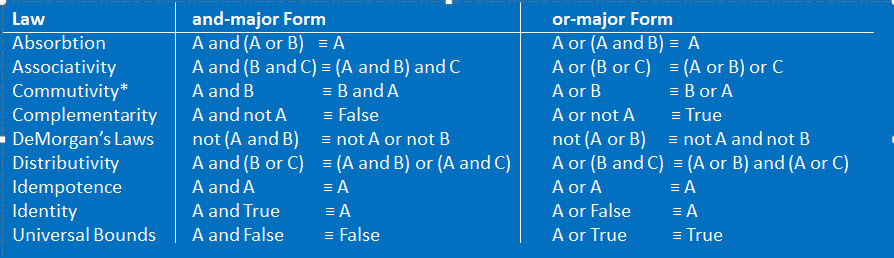

| Boolean Algebra | Boolean Algebra concerns equivalences involving the bool type and the logical operators. The following is a list of useful laws (theorems, if you will) of Boolean Algebra. The most practical law is DeMorgan's law: one form explains how to simplify the negation of a conjunction (not (a and b) is equivalent to not a or not b) and the other form explains how to simplify the negation of a disjunction (not (a or b) is equivalent to not a and not b). |
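Because a bool variable has only two values, a law with n variables can be checked mechanically by trying all 2**n combinations. The sketch below uses a small helper, `equivalent` (a hypothetical name, not a library function), to check both forms of DeMorgan's law this way.

```python
from itertools import product

def equivalent(f, g, n):
    """Return True if f and g agree on every combination of n bool arguments."""
    return all(f(*args) == g(*args)
               for args in product((False, True), repeat=n))

# Conjunctive form: not (a and b) is equivalent to (not a) or (not b).
print(equivalent(lambda a, b: not (a and b), lambda a, b: not a or not b, 2))   # True
# Disjunctive form: not (a or b) is equivalent to (not a) and (not b).
print(equivalent(lambda a, b: not (a or b), lambda a, b: not a and not b, 2))   # True
```

Since `not` binds more tightly than `and` and `or`, `not a or not b` means `(not a) or (not b)`, so no extra parentheses are needed on the right-hand sides.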

|

We can easily prove laws in Boolean Algebra by trying every combination of the True and False values for each of the variables.

For example, to prove the conjunctive version of DeMorgan's law, we can start with the following table of values, called a truth table.

Here we list all the variables in the leftmost columns, and the two expressions (which we hope to prove equivalent) in the rightmost columns.

We then fill in each column by just computing the values of the expressions (using the semantics of the operators and our knowledge of evaluating expressions: e.g., operator precedence and associativity).

The law is proved if the columns under the two expressions always contain the same value on each line. This means that for every pair of operands, the expressions compute the same result, so the expressions are equivalent and thus interchangeable in our code. This approach also illustrates a divide-and-conquer strategy for proofs: we divide the complicated proof into four different parts (each a line in the truth table); each line is easy to verify by pure calculation; once we verify all of the lines, we have verified the entire proof. |
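The truth table for the conjunctive form of DeMorgan's law can also be built and checked line by line in code. The sketch below is illustrative: `truth_table` is a hypothetical helper that produces one row per combination of `a` and `b`, printed in the same column layout described above (variables on the left, the two expressions on the right).

```python
from itertools import product

def truth_table(left, right):
    """Build one row (a, b, left(a, b), right(a, b)) per combination of a and b."""
    return [(a, b, left(a, b), right(a, b))
            for a, b in product((False, True), repeat=2)]

rows = truth_table(lambda a, b: not (a and b),   # left side of the law
                   lambda a, b: not a or not b)  # right side of the law

print(f"{'a':6} {'b':6} {'not (a and b)':14} not a or not b")
for a, b, left, right in rows:
    print(f"{a!s:6} {b!s:6} {left!s:14} {right!s}")

# Divide and conquer: the law holds because each of the four rows agrees.
assert all(left == right for _, _, left, right in rows)
```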

| Relational Algebra |

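Relational Algebra concerns equivalences mostly involving the int and float types and the numeric and relational operators.

It is based on the law of trichotomy: for any two numbers x and y, exactly one of x < y, x == y, or x > y is True (for floats, this assumes neither value is NaN).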

As a first example, we will prove that max(x,y)+1 is equivalent to max(x+1,y+1). So, we can factor additive constants out of calls to the max function.

Here, knowing the relationship between x and y allows us to compute the answer for each expression. For example, if we know that x<y, we know max(x,y) evaluates to y, so max(x,y)+1 evaluates to y+1; likewise, if we know that x<y, we know that x+1<y+1, so max(x+1,y+1) evaluates to y+1. The other two cases can be simplified similarly. Again, the law is proved if the columns under the two expressions always contain the same value on each line. This means that for every pair of values, the expressions compute the same result, so the expressions are interchangeable.

As another example, we can prove that the expression (x<0) == (y<0) is equivalent to (x<0 and y<0) or (x>=0 and y>=0), which is True when x and y have the same sign (assuming the sign of 0 is considered positive). In Python the parentheses around x<0 and y<0 are required: without them, x<0 == y<0 is a chained comparison meaning x<0 and 0==y and y<0, which is always False. Here we need to list all possibilities of how x and y compare to 0.
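The case analyses above can be spot-checked in code. This is a sketch, not a proof: it tries one representative pair for each trichotomy case of x versus y (for the max law) and every combination of negative, zero, and positive for x and y (for the sign law). The proof itself is the case-by-case reasoning, which covers all numbers. The function names are hypothetical.

```python
def max_law_holds(x, y):
    return max(x, y) + 1 == max(x + 1, y + 1)

def same_sign_short(x, y):
    # Parentheses matter: x < 0 == y < 0 would be a chained comparison.
    return (x < 0) == (y < 0)

def same_sign_long(x, y):
    return (x < 0 and y < 0) or (x >= 0 and y >= 0)

# One representative pair per trichotomy case: x < y, x == y, x > y.
for x, y in [(1, 2), (2, 2), (3, 2)]:
    assert max_law_holds(x, y)

# Every combination of negative / zero / positive for x and y.
for x in (-1, 0, 1):
    for y in (-1, 0, 1):
        assert same_sign_short(x, y) == same_sign_long(x, y)
```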

So again, the equivalence is proven. Here is an interesting case where the smaller/simpler/more efficient code is not necessarily easier to understand. Most students believe the larger expression is easier to understand, until they intensely study the meaning of == when applied to boolean values generated by relational operators. In actual code, I would suggest using the simpler form, and then including the larger (easier to understand) form in a comment; we could even include the above proof as part of the comment.

Finally, we can use the law of trichotomy to prove how to simplify the negation of relational operators. Be careful when you do so. If it is not true that x is less than y, then there are two possibilities left: x is equal to y or x is greater than y. Beginning programmers make the mistake of negating x<y as x>y, but the correct equivalent expression is x>=y.

In fact, we can use the same kinds of proofs to show how to simplify the negation of every relational operator.
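The negation of each relational operator can be tabulated and spot-checked as shown below. The three sample values 1, 2, 3 give pairs covering all three trichotomy cases. That is an illustration; the trichotomy argument is what makes it hold for every int (and every float other than NaN). `NEGATIONS` and `negation_laws_hold` are hypothetical names; `operator.lt` and the others are the standard library's function forms of the operators.

```python
import operator
from itertools import product

# Each relational operator paired with the operator that computes its negation.
NEGATIONS = [
    (operator.lt, operator.ge),   # not (x < y)  is  x >= y
    (operator.le, operator.gt),   # not (x <= y) is  x > y
    (operator.gt, operator.le),   # not (x > y)  is  x <= y
    (operator.ge, operator.lt),   # not (x >= y) is  x < y
    (operator.eq, operator.ne),   # not (x == y) is  x != y
    (operator.ne, operator.eq),   # not (x != y) is  x == y
]

def negation_laws_hold(values):
    """Check every negation law on every ordered pair drawn from values."""
    # Parenthesize the not: `not a == b` would parse as `not (a == b)`.
    return all((not rel(x, y)) == neg(x, y)
               for rel, neg in NEGATIONS
               for x, y in product(values, repeat=2))

print(negation_laws_hold((1, 2, 3)))    # True

# The beginner's mistake: x > y is NOT the negation of x < y.
x = y = 2
print(not (x < y), x > y)               # True False
```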

Also notice that each of the relational operators is equivalent to an expression that includes only the < relational operator (along with some logical operators). Although we don't need the five other relational operators to write Python programs (in a mathematical sense), they are provided because most programmers are aware of them and can use them to write simpler programs (in a psychological sense). Thus the Python language is made larger (more operators) to make it easier for human minds to use. Such value judgements (which is better, a smaller language or a language easier for humans to use) are required by programming language designers.

Finally, we can often simplify negated relational operators in DeMorgan's Law simplifications. For example, we can "simplify" not (x < 0 or y > 10) to not (x < 0) and not (y > 10), which is actually a bit larger, but can be further simplified to x >= 0 and y <= 10, which is truly simpler. |
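A sketch of the claim that < suffices: each function below rewrites one relational operator using only < plus not/and/or, relying on trichotomy (so it assumes ints or non-NaN floats). The function names are hypothetical. The last loop checks the DeMorgan example from the text on values on each side of its boundaries. Both loops are spot checks rather than proofs.

```python
import operator
from itertools import product

# Every relational operator rewritten using only < (plus not/and/or).
def lt(x, y):
    return x < y

def gt(x, y):
    return y < x                          # x > y  means  y < x

def le(x, y):
    return not (y < x)                    # x <= y means not (x > y)

def ge(x, y):
    return not (x < y)                    # x >= y means not (x < y)

def eq(x, y):
    return not (x < y) and not (y < x)    # neither one is smaller

def ne(x, y):
    return x < y or y < x                 # one of them is smaller

PAIRS = [(lt, operator.lt), (gt, operator.gt), (le, operator.le),
         (ge, operator.ge), (eq, operator.eq), (ne, operator.ne)]

values = (1, 2, 3)   # pairs cover x < y, x == y, and x > y
for mine, builtin in PAIRS:
    for x, y in product(values, repeat=2):
        assert mine(x, y) == builtin(x, y)

# The DeMorgan example from the text, checked around each boundary value.
for x, y in product((-1, 0, 1), (9, 10, 11)):
    assert (not (x < 0 or y > 10)) == (x >= 0 and y <= 10)
```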

| Control Structure Algebra |

Now we will explore the richest algebra: the algebra of control structures. First we will examine when changing the order in a sequence of statements doesn't change the results of executing the sequence. Second we will discuss transformations to if statements. Finally, we will discuss transformations to while loops containing break statements.

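Sequence Equivalences:

We start by examining the orderings of statements and defining two important terms. A statement rebinds a name if executing it may bind a new value to that name; it evaluates a name if executing it may use that name's current value. For example, given the if statement

    if x > y:        # evaluates x and y
        z = x        # rebinds z; evaluates x
    else:
        y += 1       # rebinds and evaluates y

we say that the if statement rebinds z and y; it evaluates x and y.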

This accounts for everything the if statement might do when executed (even though, for example, sometimes it rebinds only z and sometimes only y: it depends on the result of the test).

Note that we will also say that print("...") rebinds the console, because calling this function makes new information appear in the console window (changing its state); this is a bit of a stretch.

Armed with this terminology, we can define independent statements in a sequence. Two statements are independent if we can exchange their order and they still always compute equivalent results. Two statements S1 and S2 are independent if S1 neither rebinds nor evaluates any name that S2 rebinds, and S2 neither rebinds nor evaluates any name that S1 rebinds. Or, more simply, two statements are independent (and we can reverse their order) if neither rebinds a name that the other rebinds or evaluates. Note that two independent statements may both evaluate the same name.

For example, we can exchange the order of

Obviously, changing the order of two statements doesn't simplify anything; but we will learn that such exchanges can sometimes enable further "real" simplifications.
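The rebinds/evaluates bookkeeping can be approximated mechanically with Python's standard `ast` module. This is a rough sketch under stated assumptions: it only tracks plain names, treats `y += 1` as both rebinding and evaluating `y`, and follows the convention above that a `print(...)` call rebinds a pseudo-name for the console. It does not see mutation through methods, subscripts, or other function calls with side effects, so it supplements reasoning by hand rather than replacing it. `rebinds_and_evaluates`, `independent`, and `CONSOLE` are hypothetical names.

```python
import ast

CONSOLE = "<console>"   # pseudo-name: print(...) "rebinds the console"

def rebinds_and_evaluates(source):
    """Return (rebinds, evaluates): the names the code in source may rebind or evaluate."""
    rebinds, evaluates = set(), set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Name):
            if isinstance(node.ctx, ast.Store):
                rebinds.add(node.id)
            elif isinstance(node.ctx, ast.Load):
                evaluates.add(node.id)
        if isinstance(node, ast.AugAssign) and isinstance(node.target, ast.Name):
            evaluates.add(node.target.id)      # y += 1 also reads y
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
                and node.func.id == "print"):
            rebinds.add(CONSOLE)
    return rebinds, evaluates

def independent(s1, s2):
    """True if neither statement rebinds a name the other rebinds or evaluates."""
    r1, e1 = rebinds_and_evaluates(s1)
    r2, e2 = rebinds_and_evaluates(s2)
    return not (r1 & (r2 | e2)) and not (r2 & (r1 | e1))

print(independent("a = b + 1", "c = b * 2"))   # True: they share only the evaluated name b
print(independent("a = b + 1", "b = 5"))       # False: the second statement rebinds b
```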

Boolean Assignment:

Let's examine various pairs of statements that we can prove equivalent by using modified truth tables: instead of values, each column lists the statements that each form executes for each value of the test.

The first involves storing a bool value into a name.

For the two statements

Again, because both columns show the same statements executed, regardless of the value of test, the if/else statement on the left and expression statement on the right are equivalent.
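The specific pair of statements is not reproduced here. Assuming it is the common one, `if test: b = True else: b = False` versus `b = test`, a minimal sketch (with hypothetical function names) checks both possible values of a bool test:

```python
def with_if(test):
    # Long form: choose the bool value to store with an if/else.
    if test:
        b = True
    else:
        b = False
    return b

def with_assignment(test):
    # Short form: store the value of the test directly.
    b = test
    return b

# Check both possible values of a bool test.
for test in (True, False):
    assert with_if(test) == with_assignment(test)
```

This relies on test being a bool. If it might be some other type, such as an int, the forms differ: with_if(5) stores True but with_assignment(5) stores 5. Writing b = bool(test) restores the equivalence in that case.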

Test Reversal:

This equivalence shows that we can negate the test AND swap the order of the two statements inside the if/else, and the resulting if/else statement is equivalent to the first.

This means that the following two if/else statements are equivalent. I'd argue that the one on the right is simpler and easier to understand, because it is easier to see that it is an if/else statement: our eyes can see that by looking at just the top three lines. In the version on the left, the small else clause is almost hidden at the bottom.
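Here is a hypothetical illustration of test reversal, not the lecture's own example. The first function has a long if clause and a one-line else clause. The second negates the test, using the relational law that not (n >= 0) is n < 0, and swaps the clauses so the short case is visible at the top. A loop spot-checks that they agree.

```python
def classify_v1(n):
    # Long if clause first; the one-line else clause is easy to overlook.
    if n >= 0:
        kind = "non-negative"
        digits = len(str(n))
        parity = "even" if n % 2 == 0 else "odd"
        result = (kind, digits, parity)
    else:
        result = ("negative",)
    return result

def classify_v2(n):
    # Test reversed: not (n >= 0) simplifies to n < 0, and the clauses are swapped.
    if n < 0:
        result = ("negative",)
    else:
        kind = "non-negative"
        digits = len(str(n))
        parity = "even" if n % 2 == 0 else "odd"
        result = (kind, digits, parity)
    return result

for n in range(-5, 6):
    assert classify_v1(n) == classify_v2(n)
```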

Bottom Factoring:

This equivalence shows that we can always remove (factor) a common statement out from the bottom of an if/else statement. It is similar to algebraically factoring AX+BX into (A+B)X.

For example, the code on the top is equivalent to the code on the bottom.
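Here is a separate, hypothetical illustration of bottom factoring. Both clauses of the if/else end with the same statement, `log.append(y)`, so it can be moved after the if/else, where it runs once whichever clause executes. A list named `log` stands in for any common final action.

```python
def bottom_before(x, log):
    if x > 10:
        y = x - 10
        log.append(y)      # same statement at the bottom of both clauses
    else:
        y = x + 10
        log.append(y)
    return y

def bottom_after(x, log):
    if x > 10:
        y = x - 10
    else:
        y = x + 10
    log.append(y)          # factored out: runs once, after either clause
    return y

for x in range(0, 21):
    log_before, log_after = [], []
    assert bottom_before(x, log_before) == bottom_after(x, log_after)
    assert log_before == log_after
```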

Top Factoring:

This equivalence shows that we can often remove (factor) a common statement out from the front of an if/else statement. It is similar to algebraically factoring XA+XB into X(A+B).

But there is one more detail to take care of. Now we are executing statement_c before the if/else, which means it is executed before the test expression is evaluated. This is guaranteed to work only if the statement and test are independent (as defined earlier). Because a test only evaluates names, we can simplify this condition to: statement_c must not rebind a name evaluated in test.

In the example below, the code on the top is NOT equivalent to the code on the bottom.
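Here is a hypothetical case where top factoring fails. The common first statement `x = -x` rebinds x, which the test `x > 0` evaluates. Once that statement is factored out, the test sees the negated value, so the two versions choose different clauses for any positive x.

```python
def top_before(x):
    if x > 0:
        x = -x                  # common first statement in both clauses
        sign = "was positive"
    else:
        x = -x
        sign = "was not positive"
    return x, sign

def top_after_wrong(x):
    x = -x                      # factored out, but it rebinds x, which the test evaluates
    if x > 0:
        sign = "was positive"
    else:
        sign = "was not positive"
    return x, sign

print(top_before(5))        # (-5, 'was positive')
print(top_after_wrong(5))   # (-5, 'was not positive')
```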

On the other hand, sometimes even if the test expression and statement are not independent, we can modify the test to account for the statement always executing before the if/else statement. The code on the top here is equivalent to the code on the bottom (we can prove it using the law of trichotomy, comparing z with 0).
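Here is a hypothetical sketch of adjusting the test, not a reconstruction of the lecture's own example. The common first statement `z = z - 1` rebinds z. After it is factored out, the old test `z > 0` (on the old value) must be rewritten in terms of the new value: old z is new z + 1, so the test becomes z + 1 > 0, which for ints is z >= 0. The sketch assumes z is an int.

```python
def adjusted_before(z):
    if z > 0:
        z = z - 1               # common first statement; it rebinds z
        kind = "positive"
    else:
        z = z - 1
        kind = "non-positive"
    return z, kind

def adjusted_after(z):
    z = z - 1                   # now runs before the test
    # The old test z > 0 on the old value becomes (z + 1) > 0 on the new value,
    # which for ints is z >= 0.
    if z >= 0:
        kind = "positive"
    else:
        kind = "non-positive"
    return z, kind

for z in range(-5, 6):
    assert adjusted_before(z) == adjusted_after(z)
```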

|

| Pragmatics |

The formal techniques presented and illustrated above allow us to prove that two programming forms are equivalent. It is then up to us to determine which form is simpler, and use it in our programs.

As a rule of thumb, the smaller the code the simpler it is. Also, code with fewer nested statements is generally simpler.

More generally, we should try to distribute complexity. So, when deciding between two equivalent forms, choose the one whose most complicated statement is simplest. If you ever find yourself duplicating code, there is an excellent chance that some simplification will remove this redundancy. Beginners are especially prone to duplicating large chunks of code, missing the important simplification. We should aggressively simplify our code while we are programming. We will be amply rewarded, because it is easier to add more code (completing the phases of the enhancements) to an already simplified program. If we wait until the program is finished before simplifying... well, we may never finish the program because it has become too complex; if we do finish, the context in which to perform each simplification will be much bigger and more complex, making it harder to simplify. Excessive complexity is one of the biggest problems that a software engineer faces. Generally, I try not to get distracted when I am writing code; but one of the few times that I will stop writing code is when I see a simplification. I know that in the long run, taking time to do a simplification immediately will likely allow me to finish the program faster. |

| Problem Set |

To ensure that you understand all the material in this lecture, please solve the announced problems after you read the lecture.

If you get stumped on any problem, go back and read the relevant part of the lecture. If you still have questions, please get help from the Instructor, a TA, or any other student.

|